The Self-Correcting Dimension

Imagine handling multiple sources of similar data without an industry standard to clearly identify what the data represents. Many professions utilize standardized coding systems, such as diagnosis codes, ISO codes, state codes, and ZIP codes. However, this situation, each data source identifies its content unambiguously but uses a unique system, creating challenges in harmonizing the information.

Solution

This is a common scenario in the media industry. A network typically receives data throughout the day, containing viewership information from various sources, yet lacking a standardized method to identify the product (such as episodes, sports events, advertisements).

A prior blog explored the use of hash functions for generating surrogate keys, enabling a streamlined single-step load from a source system to a Fact table. This approach ensures new viewership data becomes accessible immediately following the load process.

Essentially, this is a Type 1 dimension with a self-referencing foreign key (the Gold Key). The latter is used to maintain the attributes on the row. It would be a Type 3 if you keep raw, original attributes on the row as well. The dimension Gold Key is sometimes used when creating aggregate tables. All queries always use the Fact foreign key and the attributes on that row.

After Facts are loaded, the next step is to clean up the Dimensions. The Product Dimension is central to all analysis. This encompasses all Video product, both linear and digital, streamed or transmitted to an audience. Its a lot of data and it is critical to reliably identify the specific Product when compiling audience behavior. Part of this analysis involves time shifting due to VOD and streaming services. Getting a Product identity correct is key.

Dimension cleanup occurs on a regular basis. In the case of Product, AI is used to sort things out. Driven by an internal Product Master (gold record), the process matches references and updates the Dimension. Each update is tagged with a confidence level, allowing the system to reprocess those that do not achieve an expected level. This process allows the Dimension information to improve without requiring adjustments to any Atomic Fact Tables.

Process

The load process has 3 basic phases for the data:

- Registration

- Loading

- Clean up

Any new data set must be handled in this manner.

The Registration Phase

In this phase a new data set is examined to determine if it has already been received and registered. This phase will vary depending on the data source. A simple check would be to compare file signatures over time, while others may require filtering on timestamps, others may be more challenging.

Eventually the data set is either rejected or accepted. It is logged, assigned a batch number, and queued for loading. Many data transfer tools supply their own ‘batch number’ which can serve as a surrogate key to a Batch dimension. This can carry all sorts of information about the batch and the data load. If you have strict auditing requirements, you may introduce a ‘load number’ to track the loading of multiple batches in one job.

Information can include timestamps, row counts and measure aggregations collected in later phases. Also reasons if it is rejected and final disposition of the data.

The Load Phase

In broadcast (linear) television, Product is identified by four characteristics:

- Title – The name of the recurring program series

- Season – The broadcast season

- Episode – A specific product being broadcast

- Version – Altered versions of the original work. This includes clips, letterboxed, dubbed, and other types of post-production changes.

Before digital, each scheduled program was assigned a set of codes, the TMS ID. This system was created by Times-Mirror (now Nielson) to manage their publication of TV Guide. Now on-line services offer multiple ways to watch programming, and most of them do not subscribe to the TMS system. Those that do not use TMS codes identify a product using textual descriptions, proprietary codes, or whatever. All feeds provide text fields with separate columns for title, season and episode, but vary in content (spelling, abbreviations, numbers, etc.).

Version is an internal designation and not provided by 3rd party sources. It is not a concern for viewership analysis.

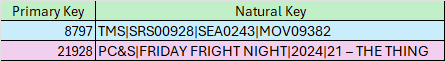

So, while one feed may use TMS standard codes and identify Title as “SRS00928”, Season as “SEA0243” and Episode as “MOV09382”, another feed may contain “Friday Fright Night”, “2024”, “21 – The Thing”. This gives you two different natural keys:

Each natural key is prefixed with an authority code. ‘TMS’ indicates the TMS coding system, and ‘PC&S’ represents a regional cable system, Paper Cup & String. These strings hash into two different BIGINT primary keys. Which leads to two different rows in the Dimension.

If the data feed is consistent, future data for the same source and product will reference the same Dimension row. This allows you to collect an accurate record of what occurred. The Fact row remains unchanged as you would never need to re-key or adjust the fact table.

The Clean Up Phase

At this point, the Fact load has completed. Users are now able to access the new facts, but not new dimension rows. By default, fact rows referencing new dimension rows will be ignored until the new dimension rows are added.

The cleaning process can be as convoluted as it needs it to be, but this process should not directly interfere with data access or the ability to perform good analysis. The next section will look into steps that need to be considered in such a process.

Cleaning the Dimension

Status of the Row

One thing the row must provide is a current rating of the quality of the data. This is reflected by the level of confidence (LOC) assigned to the row by the process. This will give the analyst a means of filtering out less reliable measures. It is also used by the cleansing process to identify which rows need cleaning.

Inferring a New Row

Identify new dimension rows from your data source and generate inferred rows populating as many attributes as possible with the data received. All such rows are assigned a level of confidence of zero.

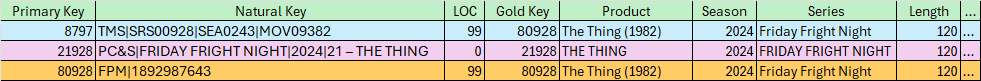

Below is a sample of selected rows to illustrate the table.

The image shows row #8797. This row was loaded previously and was cleaned, showing a LOC value of 99 (high confidence), and the Gold Key references its clean match, row #80928.

Row #21928 is a new inferred row with a LOC value of zero (no confidence), and the Gold Key column references itself. On that row, the attributes are as received from the source data.

The third row is the ‘golden record’ with attributes loaded from the Product Master.

Cleaning the Row

There are many different tools available to clean data. I’ve seen Master Data Management (MDM) tools used to identify customers and, in this case, an AI model to match raw data to the corporate master. Both can do the job and provide a confidence level. The latter is supplemented by an on-line process to resolve close ties, which also helps improve the AI model, reducing the effort managing the table.

The process also maintains a history of previous rows for audit and troubleshooting purposes. This was very useful in developing the AI model and measuring improvements as its development proceeded.

The dimension row itself may need to carry some ‘original’ attributes to allow support of review and rematching of unconfident matches. This turned out to be invaluable in shaking out the matching process. In practice, the original columns are hidden from normal users and only exposed to those involved in master data management.

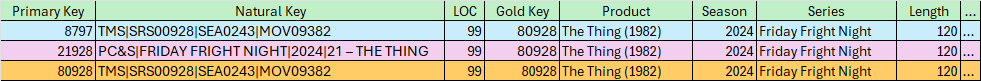

After cleaning, row #21928 now contains standard attribute values copied from the gold record.

Aggregations

The Aggregation Ladder

Aggregations are essential for this system to operate effectively. Identities and granularity vary by source. Each source is collected at the granularity of the source. There is a specific granularity for general analysis, all incoming data is aggregated and applied to core facts at the standard granularity. As original granularity is retained, other more specific analysis can be done. This forms an “aggregation ladder” that converges at the core granularity where all audience data is utilized.

During the matching process, the row stores the foreign key of the dimension row that best represents it (the Gold Key). There are two options when creating aggregates: one can aggregate on the dimension’s primary key or on the Gold Key. This process always uses the dimension’s primary key to maintain the unique identity of the product at the standard level.

Higher Aggregations

Beyound the base level, agregations can be made on the dimension’s Gold Key. This reduces the number of rows in the aggregate table, but also loses the true dimensional identity. If there are significant changes to the dimension’s content, you will need to regerate the aggregation. This is something normally done during low usage periods.

Latency

The need to create this aggregate before data is consumed introduces a delay. This delay is intentional, as data sources are often interupted. This delay allows the system to assess and report data quality and load status to consumers.

Conclusion

This dimension is a easy way to collect similar data about the same event in a way it can be used immediately. By tying the association between a fact and dimension on the source’s natural key you assure future data from that source will reference the same dimension row.

This allows you to correct the dimension row so it refects the same attributes as other rows for the same Product. This allows you unrestricted opportunity to clean and conform attributes without disturbing fact tables.